Accelerated Computing

Author: Shashi Prakash Agarwal

What It Is and How It Works

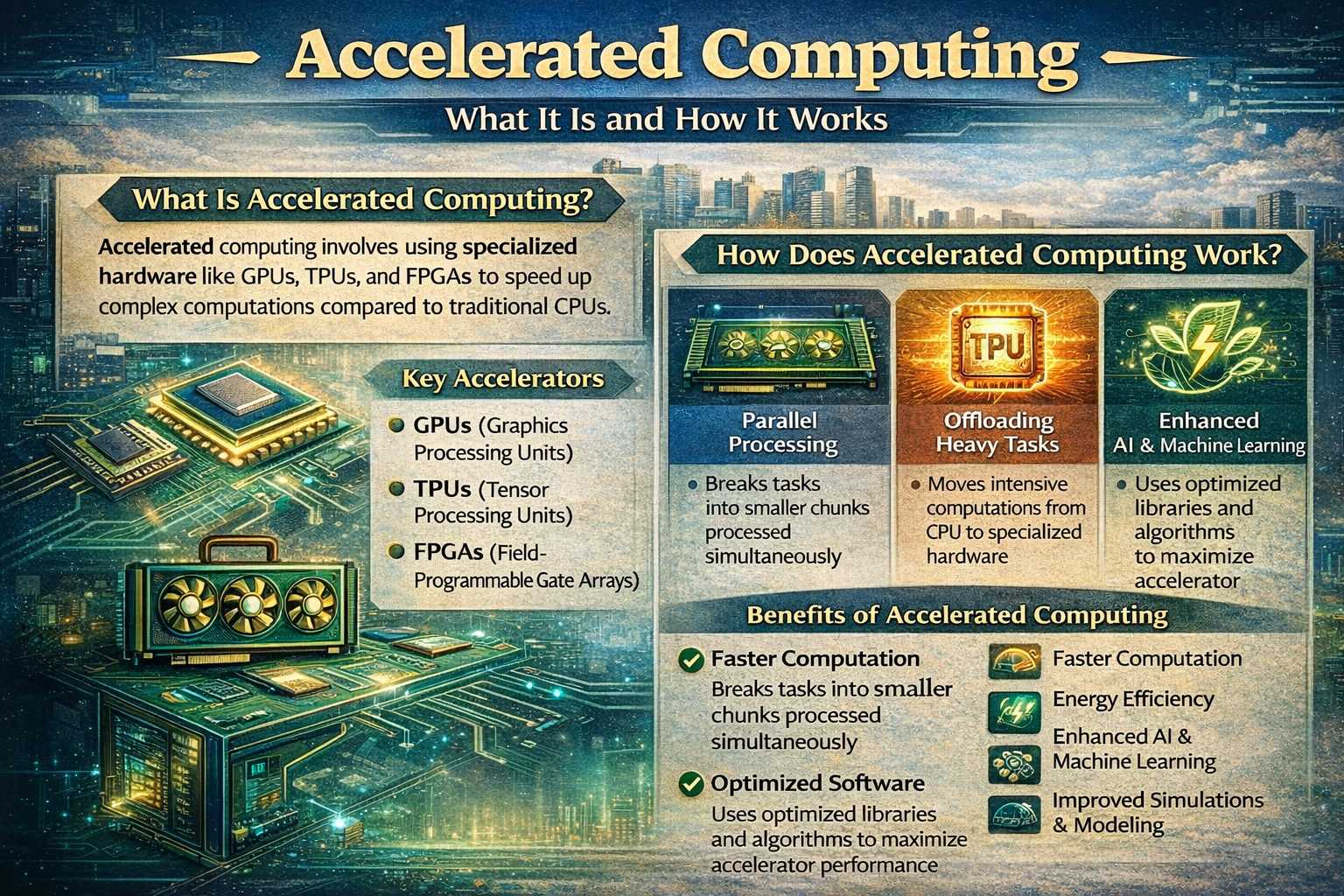

Accelerated computing has become a foundational technology behind many of today’s most powerful digital systems. As artificial intelligence, large-scale data analysis, and real-time simulations continue to expand, traditional computing methods are often no longer sufficient. Accelerated computing addresses this gap by using specialized hardware to dramatically increase processing speed and efficiency. Rather than making computers simply “faster,” accelerated computing changes how work is done inside a system by assigning specific tasks to hardware designed to handle them best.

Understanding Accelerated Computing

Accelerated computing refers to the use of specialized processors alongside a traditional central processing unit (CPU) to perform certain workloads more efficiently. These processors, commonly called accelerators, include graphics processing units (GPUs), tensor processing units (TPUs), and field-programmable gate arrays (FPGAs). In a conventional system, the CPU is responsible for nearly all computation. CPUs are flexible and powerful, but they are designed for general-purpose tasks. Accelerated computing shifts highly repetitive or parallel workloads away from the CPU and onto accelerators optimized for those operations.

GPUs excel at performing thousands of calculations simultaneously, which makes them well suited to graphics rendering, machine learning, and scientific simulations. TPUs are built specifically for the large matrix and tensor operations at the core of neural networks and other artificial intelligence workloads. FPGAs can be reconfigured after manufacture to implement custom circuits for specialized tasks such as video encoding, encryption, or financial modeling. Together, these accelerators allow systems to handle complex workloads faster and more efficiently than CPUs alone.

Why Accelerated Computing Matters

Modern workloads are increasingly specialized. Training large language models, simulating climate systems, modeling protein structures for drug discovery, and enabling autonomous vehicles all require massive computational power. Running these workloads on standard CPUs alone would be slow, expensive, and energy-intensive.

Accelerated computing makes these tasks feasible at scale. By matching workloads to hardware designed for them, systems can process data faster while consuming less energy per calculation. This energy efficiency is critical for data centers, which operate at enormous scale and face growing power constraints.

The impact of accelerated computing extends across industries. In healthcare, it enables faster medical imaging analysis and drug discovery simulations. In transportation, it allows autonomous vehicles to interpret sensor data in real time. In climate science, it supports high-resolution modeling that improves forecasting accuracy. In each case, accelerated computing shortens timelines: work that might take weeks or months on CPUs alone can often finish in days.
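How much a timeline actually shrinks depends on how much of the workload can be moved onto an accelerator. One standard way to estimate this is Amdahl's law: if a fraction p of the original run time is sped up by a factor s and the rest stays on the CPU, the overall speedup is 1 / ((1 - p) + p / s). The sketch below assumes that simple model, which ignores the cost of moving data between CPU and accelerator; the function names, the 30-day job, and the 50x factor are hypothetical illustrations.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of run time is sped up by a factor s.

    Simplified model (Amdahl's law): data-transfer and coordination
    overhead between the CPU and the accelerator are ignored.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if s <= 0:
        raise ValueError("s must be positive")
    return 1.0 / ((1.0 - p) + p / s)


def accelerated_days(baseline_days: float, p: float, s: float) -> float:
    """Estimated run time after offloading, in the same units as baseline_days."""
    return baseline_days / amdahl_speedup(p, s)


if __name__ == "__main__":
    # Hypothetical job: 30 days on CPUs alone, with the offloaded
    # portion assumed to run 50x faster on the accelerator.
    for p in (0.5, 0.9, 0.99):
        print(f"p={p:.2f}: speedup {amdahl_speedup(p, 50):.1f}x, "
              f"{accelerated_days(30, p, 50):.1f} days")
```

With p = 0.9 the hypothetical 30-day job drops to about 3.5 days. The part left on the CPU also sets a ceiling: with 10% of the work unaccelerated, the speedup can never exceed 10x, however fast the accelerator is.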

How Accelerated Computing Works in Practice

In an accelerated system, the CPU acts as a coordinator rather than the sole worker. It manages tasks, handles system logic, and delegates compute-intensive operations to accelerators. The accelerators process the data in parallel or through specialized circuits and return the results to the CPU.

This division of labor allows systems to scale efficiently. Rather than relying only on ever-faster CPUs, organizations can add or upgrade accelerators as computational demands grow. The approach also lets developers optimize performance by assigning each part of a workload to the most appropriate hardware.

Software plays a crucial role in making this possible. Programming frameworks and libraries can detect which accelerators are available and place work on them, often falling back to the CPU when none are present. This abstraction lets developers benefit from acceleration without managing low-level hardware details directly.
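The coordinator-and-accelerator pattern can be sketched in a few lines of Python. Everything here is hypothetical: the backend names, select_backend, and the thread pool standing in for an accelerator are illustrative assumptions, not any real framework's API. The threads only model the structure of parallel dispatch (split the batch into independent chunks, process them concurrently, gather the results in order); in CPython they will not actually speed up pure-Python arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical backend names, most preferred first.
PREFERENCE = ("tpu", "gpu", "cpu")


def select_backend(available: set[str]) -> str:
    """Pick the most preferred backend that is present, falling back to the CPU."""
    for name in PREFERENCE:
        if name in available:
            return name
    return "cpu"


def run_on_cpu(kernel: Callable[[float], float], data: list[float]) -> list[float]:
    # General-purpose path: process elements one after another.
    return [kernel(x) for x in data]


def run_on_simulated_accelerator(
    kernel: Callable[[float], float], data: list[float], lanes: int = 8
) -> list[float]:
    # Stand-in for an accelerator: split the batch into independent chunks,
    # process the chunks concurrently, then gather results in original order.
    size = max(1, -(-len(data) // lanes))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=lanes) as pool:
        parts = pool.map(lambda chunk: [kernel(x) for x in chunk], chunks)
        return [y for part in parts for y in part]


def coordinate(
    kernel: Callable[[float], float], data: list[float], available: set[str]
) -> tuple[str, list[float]]:
    # CPU-side coordinator: choose where the work runs, delegate it,
    # and collect the results.
    backend = select_backend(available)
    if backend == "cpu":
        return backend, run_on_cpu(kernel, data)
    return backend, run_on_simulated_accelerator(kernel, data)


if __name__ == "__main__":
    # Element-wise y = 2x + 1: every element is independent, which is what
    # makes this kind of work a good fit for parallel hardware.
    backend, ys = coordinate(lambda x: 2.0 * x + 1.0, [float(i) for i in range(10)], {"gpu", "cpu"})
    print(backend, ys)
```

Real frameworks usually make the selection step explicit. In PyTorch, for example, a common idiom is torch.device("cuda" if torch.cuda.is_available() else "cpu"), after which models and tensors are moved to that device with .to(device).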

Accessing Accelerated Computing

Accelerated computing is no longer limited to research labs or large technology firms. Cloud platforms make it accessible to businesses of all sizes by offering on-demand access to GPU- and accelerator-backed infrastructure. Startups, researchers, and enterprises can run advanced workloads without purchasing and maintaining expensive hardware. This flexibility is especially valuable for workloads that are compute-intensive but intermittent, such as simulations, machine learning training, or large-scale data processing.

On the software side, NVIDIA's CUDA platform provides the programming model and libraries for running general-purpose code on NVIDIA GPUs, and widely used frameworks such as TensorFlow and PyTorch build on CUDA and similar libraries to run on GPUs and other accelerators. These tools let developers use accelerated hardware with relatively few changes to existing workflows, which can significantly reduce development time and cost.
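Why on-demand access suits intermittent workloads can be made concrete with simple break-even arithmetic. This is a deliberately simplified model with made-up prices: owning is treated as one fixed monthly cost (amortized hardware, power, and upkeep rolled together), renting as a flat hourly rate, and real-world details such as discounts, data-transfer fees, or usage-dependent power costs are ignored. The function names are hypothetical.

```python
def monthly_rent_cost(hours_used: float, hourly_rate: float) -> float:
    """Cost of renting accelerator time on demand for one month."""
    return hours_used * hourly_rate


def breakeven_hours(fixed_monthly_cost: float, hourly_rate: float) -> float:
    """Monthly usage above which owning becomes cheaper than renting."""
    return fixed_monthly_cost / hourly_rate


def cheaper_option(hours_used: float, fixed_monthly_cost: float, hourly_rate: float) -> str:
    """Return "rent" if on-demand use costs less than owning, else "own"."""
    rent = monthly_rent_cost(hours_used, hourly_rate)
    return "rent" if rent < fixed_monthly_cost else "own"


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    OWN_PER_MONTH = 2000.0  # amortized purchase + power + maintenance
    RATE_PER_HOUR = 4.0     # on-demand price per accelerator-hour
    print("break-even:", breakeven_hours(OWN_PER_MONTH, RATE_PER_HOUR), "hours/month")
    for hours in (50, 200, 600):
        print(hours, "h ->", cheaper_option(hours, OWN_PER_MONTH, RATE_PER_HOUR))
```

With these placeholder numbers the break-even point is 500 hours per month, about 70 percent of a 720-hour month. A workload that needs accelerators only in occasional bursts stays well below that line, while one that keeps the hardware busy most of the month would favor ownership.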

Accelerated Computing and the Future of Technology

Accelerated computing is shaping the future of digital infrastructure. As artificial intelligence models grow larger and data volumes continue to expand, demand for specialized compute will only increase. The ability to process information faster and more efficiently has become a strategic advantage, not just a technical one. Geopolitically and economically, control over advanced computing hardware and supply chains is becoming increasingly important. Nations and companies alike view accelerated computing as critical infrastructure, comparable to energy or transportation networks. From an investment and innovation perspective, accelerated computing underpins progress across semiconductors, cloud services, artificial intelligence, robotics, and autonomous systems. Its influence is broad, structural, and long-term.

Final Thoughts

Accelerated computing is not about making computers marginally faster. It represents a fundamental shift in how computational work is distributed and executed. By pairing general-purpose CPUs with specialized accelerators, modern systems can tackle problems that were previously impractical or impossible. As workloads continue to evolve and computational demands intensify, accelerated computing will remain a central pillar of technological advancement. Understanding how it works and why it matters provides valuable insight into the future of artificial intelligence, scientific discovery, and digital infrastructure.